| Version 31 (modified by nakasato, 14 years ago) |

|---|

Matrix Multiply on GPU

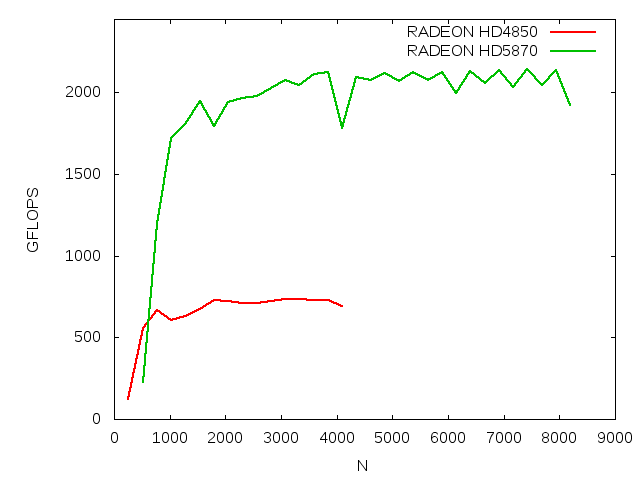

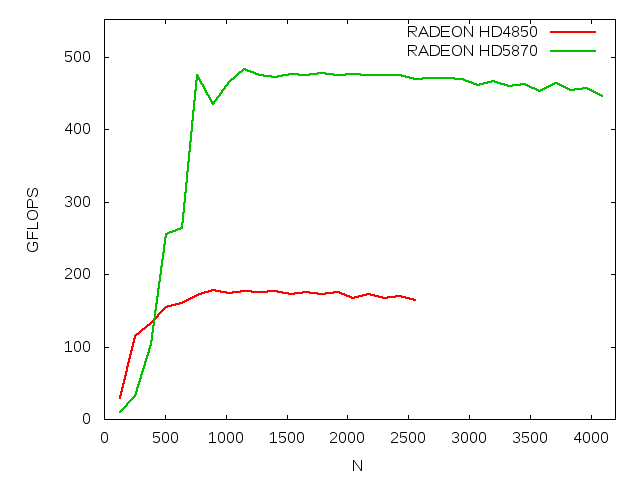

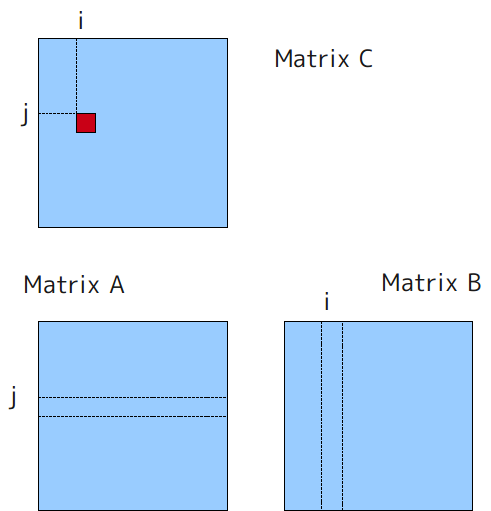

We have implemented single- and double-precision matrix multiply programs for RV770/Cypress. Our implementation uses two input streams to compute C=AB. One is the input matrix A stored transposed (i.e. column major), and the other is the input matrix B in normal format (i.e. row major). The output matrix C is also row major. We adopted an 8x8 block for single precision and a 4x4 block for double precision. Here are the benchmark results for each case. Note that only kernel execution time is measured.
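To make the data layout concrete, here is a minimal CPU sketch in Python of a blocked C=AB with A supplied transposed and B row major. It is not the IL kernel: the function names (`transpose_flat`, `matmul_blocked`), the flat-list storage, and the loop order are assumptions made for illustration. The point it shows is that, with A transposed, step k reads one contiguous segment from each input and updates a whole block of C as an outer product.

```python
def transpose_flat(m, n):
    """Return the transpose of an n x n row-major flat list."""
    return [m[j * n + i] for i in range(n) for j in range(n)]


def matmul_blocked(a_t, b, n, block=8):
    """Compute C = A B for n x n matrices stored as flat lists.

    a_t holds A transposed (a_t[k*n + i] == A[i][k]); b and the result
    are row major. Each block x block tile of C is accumulated locally,
    standing in for the registers a GPU thread would use (assumption).
    """
    if n % block != 0:
        raise ValueError("n must be a multiple of the block size")
    c = [0.0] * (n * n)
    for i0 in range(0, n, block):
        for j0 in range(0, n, block):
            acc = [[0.0] * block for _ in range(block)]
            for k in range(n):
                # Both reads are contiguous because A is stored transposed.
                a_seg = a_t[k * n + i0 : k * n + i0 + block]
                b_seg = b[k * n + j0 : k * n + j0 + block]
                # Rank-1 (outer product) update of the tile.
                for bi in range(block):
                    a_val = a_seg[bi]
                    row = acc[bi]
                    for bj in range(block):
                        row[bj] += a_val * b_seg[bj]
            # Write the finished tile back to row-major C.
            for bi in range(block):
                start = (i0 + bi) * n + j0
                c[start : start + block] = acc[bi]
    return c
```

With `block=8` each k step loads 16 values and performs 64 multiply-adds, which is the usual motivation for a larger tile; whether the real kernel is organized exactly this way is not stated here.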

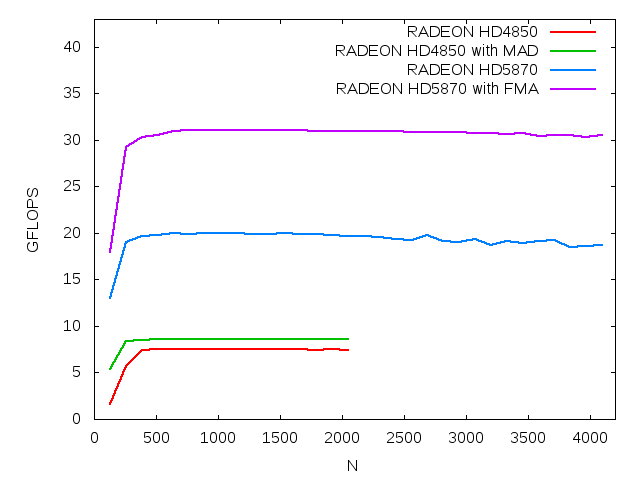

I have updated the double-double (DD) precision performance. We used a 2x2 block in this case. On Cypress-architecture GPUs, we take advantage of the FMA_64 instruction. For the MAD peak in DD, we assume one DD operation takes 20 DP operations (ops) without FMA and 15 ops with FMA. More precisely, DD add and DD mul without FMA each take ~20 ops, while DD mul with FMA takes only ~8 ops.
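For readers unfamiliar with DD arithmetic, the following is a minimal Python sketch of the textbook building blocks (Knuth's two-sum and Dekker's split-based two-product) and of a simple DD add and DD mul built on them. It is an assumption that the kernel uses a formulation along these lines; the function names are hypothetical and the op counts of this sketch need not match the ~20 and ~8 figures quoted above. A DD number is represented as a `(hi, lo)` pair of doubles.

```python
def two_sum(a, b):
    """Return (s, err) with s = fl(a + b) and a + b == s + err exactly."""
    s = a + b
    bb = s - a
    err = (a - (s - bb)) + (b - bb)
    return s, err


def quick_two_sum(a, b):
    """Same as two_sum but assumes |a| >= |b|, so it needs fewer ops."""
    s = a + b
    return s, b - (s - a)


def split(a):
    """Dekker split of a double into two halves with a == hi + lo."""
    t = 134217729.0 * a  # 2**27 + 1
    hi = t - (t - a)
    return hi, a - hi


def two_prod(a, b):
    """Return (p, err) with p = fl(a * b) and a * b == p + err exactly.

    With a fused multiply-add the error term is a single operation,
    err = fma(a, b, -p), which is why FMA makes DD mul much cheaper.
    """
    p = a * b
    ah, al = split(a)
    bh, bl = split(b)
    err = ((ah * bh - p) + ah * bl + al * bh) + al * bl
    return p, err


def dd_add(x, y):
    """Add two DD numbers (simple variant, not the most accurate one)."""
    s, e = two_sum(x[0], y[0])
    e += x[1] + y[1]
    return quick_two_sum(s, e)


def dd_mul(x, y):
    """Multiply two DD numbers, dropping the lo * lo term."""
    p, e = two_prod(x[0], y[0])
    e += x[0] * y[1] + x[1] * y[0]
    return quick_two_sum(p, e)
```

For example, squaring `1 + 2**-30` in plain double precision loses the `2**-60` term, while `dd_mul` keeps it in the low word.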

Performance Summary

| board | Pmax | Nmax | prec | reg. usage | MAD peak | note |
|---|---|---|---|---|---|---|
| HD4850 | 736 | 3328 | SP | 25 | 1040 | |
| HD5870 | 2140 | 7424 | SP | 25 | 2720 | |
| HD4850 | 177 | 1408 | DP | 19 | 208 | |
| HD5870 | 475 | 2048 | DP | 19 | 544 | |
| HD4850 | 7.5 | 768 | DDP | 21 | ~10.4 | |
| HD5870 | 20 | 1024 | DDP | 21 | ~27.2 | |
| HD5870 | 31 | 1024 | DDP | 18 | ~36.2 | FMA |

Pmax and MAD peak are in GFLOPS.
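The DD entries in the MAD peak column follow from the DP peaks and the assumed ops per DD operation (20 without FMA, 15 with FMA). The short Python sketch below redoes that arithmetic and also computes the fraction of peak reached by each Pmax; the helper names are hypothetical and all numbers are taken from the table.

```python
def dd_mad_peak(dp_peak_gflops, dp_ops_per_dd_op):
    """DD peak = DP peak divided by the DP ops one DD op is assumed to cost."""
    return dp_peak_gflops / dp_ops_per_dd_op


def efficiency(pmax_gflops, peak_gflops):
    """Fraction of the MAD peak reached by the measured Pmax."""
    return pmax_gflops / peak_gflops


# DP MAD peaks from the table (GFLOPS).
DP_PEAK = {"HD4850": 208.0, "HD5870": 544.0}

if __name__ == "__main__":
    print("HD4850 DD peak      :", dd_mad_peak(DP_PEAK["HD4850"], 20))
    print("HD5870 DD peak      :", dd_mad_peak(DP_PEAK["HD5870"], 20))
    print("HD5870 DD peak (FMA):", dd_mad_peak(DP_PEAK["HD5870"], 15))
    print("HD5870 SP efficiency:", efficiency(2140, 2720))
    print("HD5870 DP efficiency:", efficiency(475, 544))
```

Note that 544/15 is about 36.27, so the ~36.2 in the table appears to be truncated rather than rounded.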

Source code

Will be posted later.

Single precision

Double precision

Double-Double precision

Useful forum discussions

Discussion on a highly optimized MM kernel

http://forum.beyond3d.com/showthread.php?t=54842

Discussion on MM kernels in OpenCL

http://forums.amd.com/devforum/messageview.cfm?catid=390&threadid=127963

IL code generator in C++

CAL++ http://sourceforge.net/projects/calpp/

Meta-programming works in reality. Impressive work!

Attachments (6)

- MM1.png (12.7 KB) - added by nakasato 14 years ago.

- DMM.png (5.0 KB) - added by nakasato 14 years ago.

- SMM.png (5.3 KB) - added by nakasato 14 years ago.

- DDMM.png (5.2 KB) - added by nakasato 14 years ago.

- dis.txt (19.7 KB) - added by nakasato 14 years ago.

- kernel_single.il (2.8 KB) - added by nakasato 14 years ago.
